Singularity v2

As mentioned in a previous post, I use a Raspberry Pi as a media center. Unfortunately, both of its external drives started making clicking noises, so as a precaution I turned it off and started thinking about a replacement.

My requirements for Singularity v2 were pretty simple. I wanted it to run the same services I had on the Pi, plus some new ones like Nextcloud. I also wanted the machine to offer enough room for expansion and upgrades for the foreseeable future. To me that means lots of space for hard drives; being able to add more RAM later would be nice too. The choice came down to building a computer and running Unraid, or buying a Synology NAS.

I decided to build a computer myself, mostly because I could get more processing power for my money. That said, depending on exactly what you want from a NAS, a Synology could fulfill those wishes perfectly well. The rest of this post walks you through how I built my NAS and all the problems I had to solve along the way.

The Parts

| Part | Price |
|---|---|
| Intel Core i5-11400 | €199.95 |
| 2x Seagate IronWolf 8TB | €459.70 |
| Gigabyte B560M H | €88.99 |
| Corsair 16GB (2x 8GB) DDR4 3200MHz | €63.00 |
| Fractal Design Node 304 | €74.90 |
| EVGA SuperNova 550 G3 80+ Gold | €64.95 |
| Total | €951.49 |

I wanted the server to handle video transcoding without buying a separate graphics card for it, so I went with an Intel CPU for its Quick Sync support. I chose the i5-11400 because it is powerful enough to potentially handle multiple transcodes at once while costing about what I wanted to pay for a CPU. I hope it stays fast enough for a long time.

I went with 16GB of RAM because I assumed it would be enough: all my laptops have 16GB too, and I have never felt restricted. I picked Corsair because it is a reputable brand. I know Nextcloud can be heavy on memory, so more is better in this case, but I can always upgrade should I need to.

Because I plan on running Unraid, one drive is not usable as storage: it is used as the parity disk. With that in mind I chose 8TB Seagate IronWolf drives. They were the biggest capacity that fit my budget when buying two, and they are made for use in a NAS.
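As a rough sketch of what that means for capacity: Unraid needs each parity drive to be at least as large as the largest data drive, so the parity disk’s space doesn’t count toward storage. The helper below (`unraid_usable_tb` is my own hypothetical name, not part of Unraid) assumes the largest drives go to parity and ignores filesystem overhead.

```python
def unraid_usable_tb(drive_sizes_tb: list[float], parity_count: int = 1) -> float:
    """Rough usable capacity of an Unraid array.

    Assumes the largest drive(s) are assigned as parity, since each parity
    drive must be at least as large as the largest data drive. Usable space
    is the sum of the remaining data drives; filesystem overhead is ignored.
    """
    if not 0 <= parity_count <= 2:
        raise ValueError("Unraid supports zero, one, or two parity drives")
    if parity_count >= len(drive_sizes_tb):
        raise ValueError("need at least one data drive besides parity")
    # Largest drives first, so the parity slots take the biggest ones.
    data_drives = sorted(drive_sizes_tb, reverse=True)[parity_count:]
    return sum(data_drives)


print(unraid_usable_tb([8, 8]))   # my two IronWolf drives
print(unraid_usable_tb([8] * 6))  # hypothetical: all six Node 304 bays filled
```

With my two 8TB drives that leaves 8TB of usable space; a hypothetical fully loaded Node 304 with six 8TB drives and single parity would give 40TB.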

The last important part is the case. I chose the Fractal Design Node 304. The biggest draw for me was the ability to hold six hard drives, which should give me plenty of storage.

Now, the observant among you might have spotted the mistake I made when choosing the parts. The motherboard I bought is a micro-ATX board, but the case only supports mini-ITX. I only noticed this while positioning the motherboard in the case and wondering why it didn’t fit. Unfortunately, this was the first of many mistakes.

I sent the board back and bought one that did fit: the Gigabyte B560I AORUS PRO AX. I chose this board because it supports all the other parts I already had, and it has four SATA ports. It was almost twice as expensive, but I wanted it as soon as possible, so to me it was worth it. That brings the parts list to the following:

| Part | Price |
|---|---|
| Intel Core i5-11400 | €199.95 |
| 2x Seagate IronWolf 8TB | €459.70 |
| Gigabyte B560I AORUS PRO AX | €161.00 |
| Corsair 16GB (2x 8GB) DDR4 3200MHz | €63.00 |
| Fractal Design Node 304 | €74.90 |
| EVGA SuperNova 550 G3 80+ Gold | €64.95 |
| Total | €1023.50 |

When the board arrived, I assembled the computer. After some trouble connecting the front panel to the motherboard, it turned on! Now I could get on with setting up Unraid.

I had a couple of USB sticks lying around, so I tried to make the Unraid boot drive with one of those. I had some trouble creating the boot drive on Linux. In the end I figured out that you need to copy the files onto the stick, rename the drive to UNRAID, unmount it, and run `sudo bash make_bootable_linux` from somewhere other than the drive itself. After messing around in the BIOS I got the machine to boot from the stick consistently, but the server wasn’t reachable from the browser. It turned out the stick was not good enough and probably didn’t have a GUID (Unraid ties its license to the flash drive’s unique GUID), which makes sense because these were generic sticks. I bought a SanDisk Cruzer Fit 2.0 because SanDisk is a brand I saw recommended in several threads. With this stick everything went fine and I could set up Unraid.
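If you want to check your own sticks before going through the same trial and error, here is a rough sketch. It assumes a Linux machine with `lsblk` from util-linux and relies on a heuristic of my own: a USB disk that reports no serial number at all is a likely candidate for the missing-GUID problem. This is not Unraid’s own check, and both function names are hypothetical.

```python
import json
import subprocess


def usb_disks_without_serial(lsblk_json: str) -> list[str]:
    """Return the names of USB disks that report no serial number.

    Expects the JSON printed by `lsblk -J -d -o NAME,TRAN,SERIAL`.
    Heuristic only: a missing serial suggests the stick may not provide
    the unique GUID Unraid needs; it is not Unraid's own check.
    """
    devices = json.loads(lsblk_json).get("blockdevices", [])
    suspects = []
    for dev in devices:
        if dev.get("tran") != "usb":
            continue  # skip SATA/NVMe disks and anything else
        serial = (dev.get("serial") or "").strip()  # null or blank -> ""
        if not serial:
            suspects.append(dev["name"])
    return suspects


def scan_usb_sticks() -> list[str]:
    """Run lsblk on this machine and return the suspicious USB disks."""
    # -J: JSON output, -d: whole disks only (no partitions).
    result = subprocess.run(
        ["lsblk", "-J", "-d", "-o", "NAME,TRAN,SERIAL"],
        check=True,
        capture_output=True,
        text=True,
    )
    return usb_disks_without_serial(result.stdout)
```

Call `scan_usb_sticks()` on the machine with the sticks plugged in. An empty list only means every USB disk reported some serial; that still doesn’t guarantee Unraid will accept the stick, since cheap sticks can report serials that aren’t unique.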

Setting up Unraid

I used this video as a guide for setting up Unraid. I created shares for series, movies, music, backups, and Nextcloud documents. From there it was a matter of transferring the data from the external drives to Unraid. At first I tried to copy everything over the network by opening the share in my file manager and copying the files to it. The transfer rate was around 100MB/s, which was too slow for the amount of data I was copying. After some googling I found the Unassigned Devices plugin, which lets you mount drives that are not part of the array directly on the server. Mounting the external drives this way doubled the transfer speed, and I got all my data onto the array in less than two days.
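To put “too slow” into numbers, a back-of-the-envelope sketch helps. The 8TB in the example is a hypothetical amount (one full IronWolf drive), not how much data I actually moved, and the sketch assumes the rate stays constant for the whole copy.

```python
def transfer_hours(data_tb: float, rate_mb_s: float) -> float:
    """Hours needed to copy `data_tb` terabytes at a steady `rate_mb_s` MB/s.

    Uses decimal units (1 TB = 1,000,000 MB), like drive manufacturers do.
    """
    if rate_mb_s <= 0:
        raise ValueError("rate must be positive")
    seconds = data_tb * 1_000_000 / rate_mb_s
    return seconds / 3600


# Hypothetical example: copying 8 TB over the network vs. via Unassigned Devices.
for label, rate in [("network share", 100), ("Unassigned Devices", 200)]:
    print(f"{label}: {transfer_hours(8, rate):.1f} h")
```

Doubling the rate halves the time, which for an 8TB copy is the difference between roughly 22 and 11 hours.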

After I excitedly told people about my new Unraid server, someone mentioned I should have gotten a cache drive because it really helps with performance: you can run all the containers and VMs from the cache while using the hard drives for mass storage. After some agonizing over which configuration to use, 1x 1TB for more storage or 2x 500GB for redundancy, I went with two 500GB Samsung M.2 PCIe 3.0 drives. I chose Samsung because it is a reputable brand, and since the motherboard has two M.2 slots, I wanted to take advantage of both. The cache is now redundant, so should one drive fail I won’t lose any data. The lower speed of PCIe 3.0 is not a problem because my network is not fast enough to saturate the drives’ read or write speeds anyway. Going with these kept the cost down on an expense I had expected to come later. That brings the final parts list to the following:

| Part | Price |
|---|---|
| Intel Core i5-11400 | €199.95 |
| 2x Seagate IronWolf 8TB | €459.70 |
| Gigabyte B560I AORUS PRO AX | €161.00 |
| Corsair 16GB (2x 8GB) DDR4 3200MHz | €63.00 |
| Fractal Design Node 304 | €74.90 |
| EVGA SuperNova 550 G3 80+ Gold | €64.95 |
| 2x Samsung 980 500GB M.2 SSD | €119.80 |
| Total | €1143.30 |
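The cache trade-off I went back and forth on can be put into rough numbers. This sketch assumes a standard 1 Gbit/s home network (which matches the roughly 100MB/s copies from earlier) and uses the theoretical PCIe 3.0 x4 link limit rather than a benchmark of the Samsung 980; the helper names are my own.

```python
def gbit_to_mb_s(gbit_s: float) -> float:
    """Convert a network link speed to MB/s (8 bits per byte, decimal units)."""
    return gbit_s * 1000 / 8


def pcie3_link_mb_s(lanes: int = 4) -> float:
    """Theoretical PCIe 3.0 bandwidth: 8 GT/s per lane, 128b/130b encoding."""
    return 8000 * (128 / 130) / 8 * lanes


def usable_cache_gb(drive_gb: int, count: int, mirrored: bool) -> int:
    """Usable cache space. In a classic mirror every drive holds a full copy,
    so the pool only offers one drive's worth of space."""
    return drive_gb if mirrored else drive_gb * count


link = gbit_to_mb_s(1)     # assumed gigabit network
drive = pcie3_link_mb_s()  # link limit, not a measured drive speed
print(f"network {link:.0f} MB/s vs PCIe 3.0 x4 {drive:.0f} MB/s")
print("1x 1TB:", usable_cache_gb(1000, 1, mirrored=False), "GB, no redundancy")
print("2x 500GB mirror:", usable_cache_gb(500, 2, mirrored=True), "GB, survives one failure")
```

Even with generous overhead, the network link sits more than an order of magnitude below what a PCIe 3.0 x4 slot can carry, which is why the older interface doesn’t hold anything back here.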

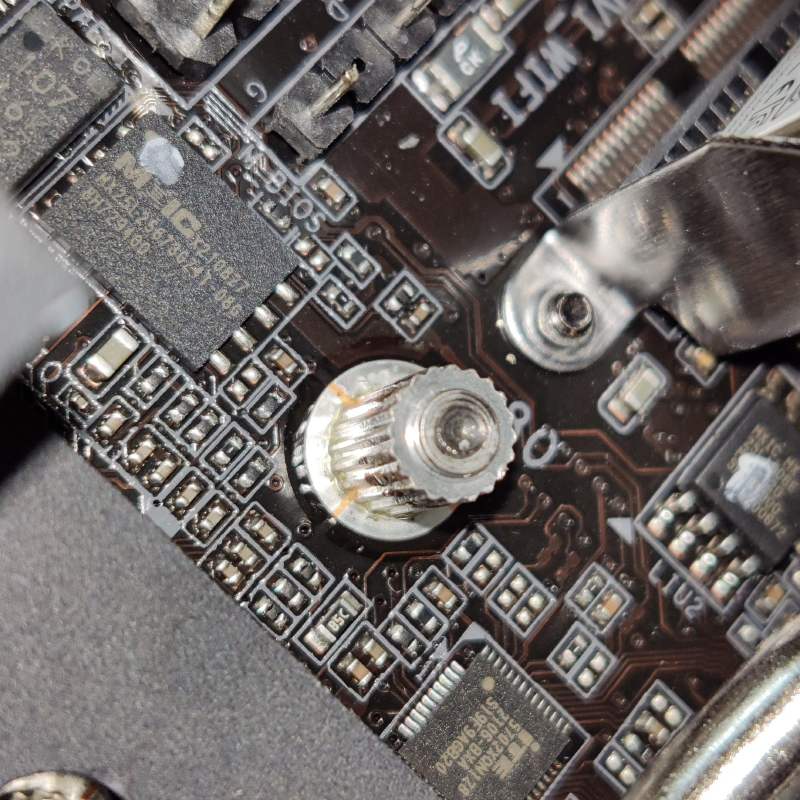

Unfortunately, that wasn’t the end of my bad luck. I watched a video on how to install an M.2 drive on my motherboard so there wouldn’t be any surprises. I removed the cosmetic shield and the heat sink from the board, installed the M.2 drive, removed the tape from the heat sink, and put everything back. When installing the second M.2 drive on the back of the motherboard, I noticed there was still a sticker on it. I had forgotten to remove that sticker from the first drive I installed, so I had to take that one out again. The screw felt suspiciously loose. It turned out that it had broken and was now stuck in the motherboard. Not knowing how to fix it, I called it a night.

The next day I called the support line of the shop I bought it from, azerty.nl. They told me I had three options: find someone brave enough to remove the screw from the motherboard, send the board in and have them look at it, or just glue it down with superglue. “If it’s stupid and it works, it isn’t stupid,” in their words.

I had already thrown away the anti-static bag the motherboard came in, so sending it back was not really an option. Instead, I started searching for a computer repair shop that would take a shot at it. The first one I called said “sure, no problem, bring it in,” so the next weekend I went there. Once they had looked at it, though, they said it was too big a risk and wouldn’t do it. After that I walked through the city trying several more shops; none of them could fix it, or even tried. In the end I found an Apple repair shop that had the proper tools and took a look at it. Unfortunately, the screw was completely stuck.

Since sending it back wasn’t an option, I went with superglue. I don’t expect to remove the drive any time soon, and my dad assured me that removing something glued down with superglue is possible, so we’ll see. It’s a bridge I will cross when I get to it. For now it works, so it isn’t stupid!

System setup

I wanted to set up everything I had running on the old server. A friend pointed me to the Community Applications (CA) plugin for Unraid, which made installing the Docker containers extremely easy. In the future I would like an infrastructure-as-code solution for this. I would have liked to set it up with docker-compose like I did in the past, or to experiment with Ansible. I guess I could always run Docker in a VM and set that up programmatically, but that is a worry for the future.

Everything went swimmingly except for hardware-accelerated transcoding in Jellyfin. I read their documentation and found out I needed to pass `/dev/dri/` to the container so it could access the integrated GPU. The only problem was that the directory didn’t exist. I had to install the intel-gpu-top plugin from CA; that made the directory appear, but transcoding still didn’t work. Next I tried updating FFmpeg. Jellyfin uses its own fork of FFmpeg that includes extra patches, and the linuxserver.io images include a directory for scripts that run at container setup, so you can customize the installation. Even after updating to the latest FFmpeg version, it still didn’t work. After some more googling I found out that my CPU probably needs Ubuntu 21 for driver reasons. Unfortunately, the linuxserver.io images are based on Ubuntu 20.

I found this thread by ich777 about a Docker image optimized for hardware acceleration. It includes setup steps for NVIDIA, Intel, and AMD. One of the steps was to install intel-gpu-top, which I had already done, so the only thing left was to swap the Docker image, and it finally worked.
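Looking back, the first hurdle was simply whether `/dev/dri` existed on the host. A small pre-flight check like the sketch below would have caught that before touching any container. It is a hypothetical helper, not part of Unraid or Jellyfin. The `--device` flag is the plain `docker run` way of passing the directory through; on Unraid you would normally add it as a device in the container template instead.

```python
from pathlib import Path


def render_nodes(dri_dir: str = "/dev/dri") -> list[str]:
    """List the DRM render nodes (e.g. renderD128) found in `dri_dir`.

    Returns an empty list when the directory is missing, which is the
    situation I was in before installing the intel-gpu-top plugin.
    """
    path = Path(dri_dir)
    if not path.is_dir():
        return []
    return sorted(p.name for p in path.iterdir() if p.name.startswith("renderD"))


def docker_device_args(dri_dir: str = "/dev/dri") -> list[str]:
    """Build the `docker run` arguments that pass `dri_dir` into a container."""
    if not render_nodes(dri_dir):
        raise RuntimeError(f"no render nodes in {dri_dir}; is the iGPU driver loaded?")
    return ["--device", f"{dri_dir}:{dri_dir}"]


if __name__ == "__main__":
    print(render_nodes() or "no /dev/dri render nodes found")
```

Note that a render node being present only proves the driver exposed the GPU; as my Ubuntu 20 detour showed, the software inside the container still has to support it.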

Conclusion

The server has been running for a couple of weeks now and I am happy with it. It works very well as a media server: Jellyfin works flawlessly with Kodi and for streaming outside the house. The hardware is more than enough for what it is doing, and transcoding is more than fast enough for a single stream. My only complaint is that it can be somewhat loud when there is no other noise in the room. I’m not sure there’s much I can do about that, and it’s a minor nitpick at this point, but in the future I might replace the stock case fans with Noctua ones.

Overall, I am more than happy with my choice to build my own server instead of buying a Synology. I fully expect it to last me years and years, especially once I add more storage and services.